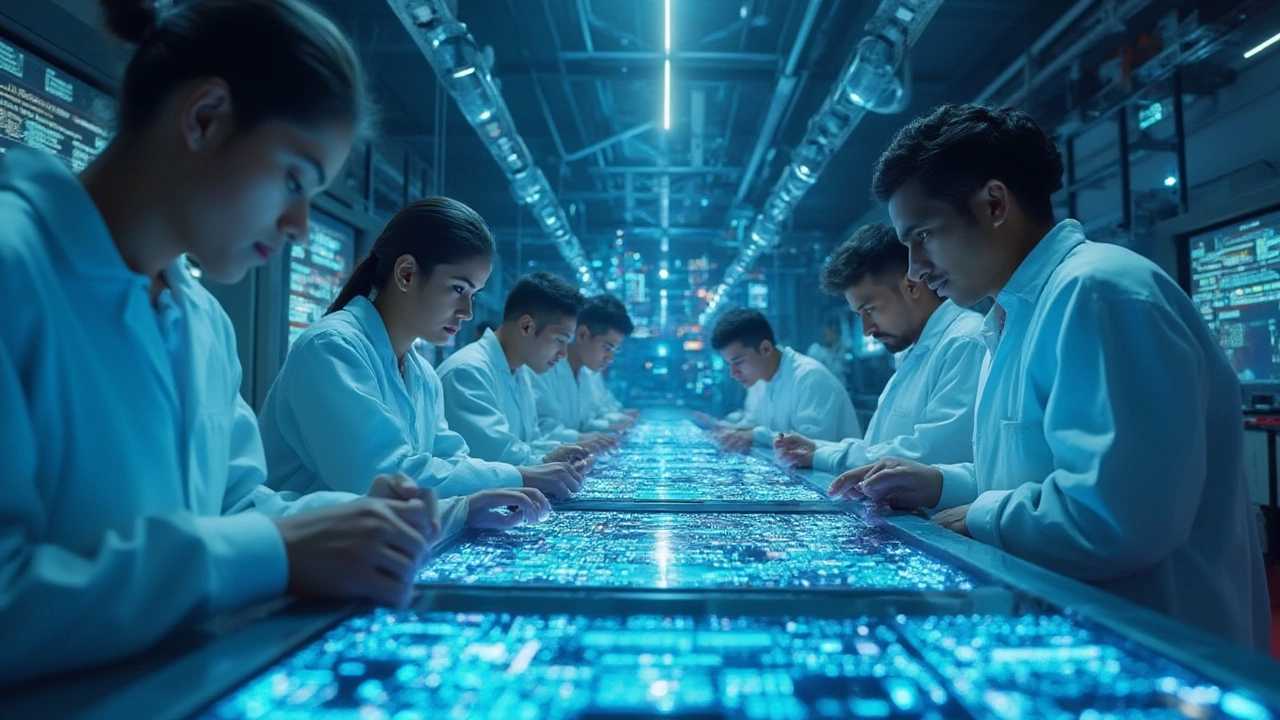

Artificial Intelligence Hardware: What It Is and Why It Counts

Artificial intelligence hardware is the physical stuff that makes AI run fast. Think of chips, boards, and tiny modules that turn data into decisions in real time. Without the right hardware, even the smartest algorithm stays stuck on a slow computer.

Key Components of AI Hardware

Most AI training and inference runs on specialized processors such as GPUs, TPUs, and dedicated AI accelerators. These units are built for massively parallel work, like the matrix multiplications inside neural networks, which general‑purpose CPUs handle far more slowly. Edge devices, such as smart cameras or voice assistants, use compact AI modules that process data locally, cutting latency and saving network bandwidth.
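Why does AI map so well onto parallel hardware? A neural‑network layer is mostly a matrix multiplication, and every output value is an independent dot product. The sketch below is a toy illustration, not how any real chip or framework works internally: it computes a dense layer row by row and hands each row to a worker thread. The function names `neuron_output` and `dense_layer` and the example numbers are made up.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights_row, inputs):
    """One output neuron: the dot product of a weight row with the input vector."""
    return sum(w * x for w, x in zip(weights_row, inputs))


def dense_layer(weights, inputs, workers=4):
    """Compute y = W @ x row by row.

    No row depends on any other, so each one can go to a separate worker.
    The threads only illustrate that independence: in CPython they do not run
    this arithmetic faster, whereas a GPU spreads the same kind of work
    across thousands of cores.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so y[i] matches row i.
        return list(pool.map(lambda row: neuron_output(row, inputs), weights))


# Toy 3x2 weight matrix and a 2-element input (illustrative values only).
W = [[1, 2], [3, 4], [5, 6]]
x = [1, 1]
print(dense_layer(W, x))  # [3, 7, 11]
```

Real layers have thousands of rows instead of three. That is where hardware with thousands of simple cores beats a CPU with a handful of complex ones.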

Memory and storage matter too. Fast on‑chip SRAM and high‑bandwidth memory (HBM) feed data to the processors quickly enough that they are not left waiting. Power management circuits keep the hardware efficient, especially in battery‑powered gadgets.
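A common way to see why memory bandwidth matters is the roofline model. Attainable throughput is the smaller of two numbers: peak compute, or memory bandwidth times the workload's arithmetic intensity (FLOPs per byte moved). The sketch below applies this to a matrix‑vector product, which is typical of running a model one input at a time. The accelerator figures (100 TFLOP/s, 2 TB/s) are hypothetical, not taken from any real product.

```python
def attainable_gflops(peak_gflops, bandwidth_gb_per_s, flops_per_byte):
    """Roofline model: throughput is capped by compute or by memory bandwidth,
    whichever limit is hit first."""
    return min(peak_gflops, bandwidth_gb_per_s * flops_per_byte)


def matvec_intensity(n, bytes_per_value=2):
    """FLOPs per byte for an n x n matrix-vector product with 16-bit values.

    Each of the n*n weights is read once and used for one multiply and one add;
    the n inputs and n outputs add a little extra memory traffic.
    """
    flops = 2 * n * n
    bytes_moved = (n * n + 2 * n) * bytes_per_value
    return flops / bytes_moved


# Hypothetical accelerator: 100 TFLOP/s peak compute, 2 TB/s of HBM bandwidth.
PEAK_GFLOPS = 100_000
HBM_GB_PER_S = 2_000

intensity = matvec_intensity(4096)  # roughly 1 FLOP per byte
achieved = attainable_gflops(PEAK_GFLOPS, HBM_GB_PER_S, intensity)
print(f"{achieved:.0f} GFLOP/s of {PEAK_GFLOPS} peak")
```

With these assumed numbers, the chip reaches only about 2% of its peak on this workload. That is why memory bandwidth, not just raw compute, shapes AI hardware design.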

Design Tips for Manufacturers

If you’re building AI hardware, start with energy efficiency. Choose chips that deliver the performance you need without draining power, and compare them on performance per watt rather than raw speed alone. Modular designs let you upgrade parts later without scrapping the whole system.
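The "performance you need without draining power" rule can be written as a simple selection step. First keep only the chips that meet a throughput target, then take the one with the lowest power draw. This is a minimal sketch. The `Chip` fields, the catalogue entries, and the 20 TOPS target are all hypothetical; a real selection would also weigh cost, memory, and software support.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Chip:
    name: str
    tops: float   # peak throughput in trillions of operations per second
    watts: float  # typical power draw under load

    @property
    def tops_per_watt(self) -> float:
        return self.tops / self.watts


def pick_chip(chips: List[Chip], required_tops: float) -> Optional[Chip]:
    """Return the lowest-power chip that still meets the throughput target,
    or None if no chip is fast enough."""
    fast_enough = [c for c in chips if c.tops >= required_tops]
    return min(fast_enough, key=lambda c: c.watts, default=None)


# Hypothetical catalogue; the names and figures are made up for illustration.
catalog = [
    Chip("chip-a", tops=4, watts=2),
    Chip("chip-b", tops=26, watts=10),
    Chip("chip-c", tops=100, watts=60),
]

choice = pick_chip(catalog, required_tops=20)
print(choice.name, f"{choice.tops_per_watt:.1f} TOPS/W")  # chip-b 2.6 TOPS/W
```

The fastest part is not chosen here. `chip-c` would meet the target too, but it would burn six times the power for headroom the product does not need.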

Supply‑chain reliability is another big factor. Lock in sources for critical components early, and keep backup vendors ready. That way you avoid delays when a single supplier runs out of stock.

Testing should be continuous. Run real‑world workloads on prototypes to catch overheating or timing glitches before full production. Simple stress tests can save weeks of re‑engineering later.
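A basic timing stress test can be as small as this. It runs a workload many times, records the latency of each run, and counts how often it misses a deadline. This is a minimal sketch assuming a 5 ms deadline. `fake_inference` is a hypothetical stand‑in for a real model call on the prototype. The sketch checks timing only, because reading chip temperature depends on platform‑specific sensor interfaces.

```python
import statistics
import time


def stress_test(workload, runs=200, deadline_ms=5.0):
    """Run a workload repeatedly and summarise its latency.

    Only timing is checked here; catching thermal problems would also need
    platform-specific temperature sensors, which this sketch does not read.
    """
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        workload()
        latencies.append((time.perf_counter() - start) * 1000.0)  # milliseconds

    latencies.sort()
    # A simple estimate of the 99th percentile from the sorted samples.
    p99 = latencies[min(len(latencies) - 1, int(0.99 * len(latencies)))]
    return {
        "median_ms": statistics.median(latencies),
        "p99_ms": p99,
        "deadline_misses": sum(1 for t in latencies if t > deadline_ms),
    }


# Hypothetical stand-in for a model inference call.
def fake_inference():
    sum(i * i for i in range(10_000))


print(stress_test(fake_inference, runs=100, deadline_ms=5.0))
```

Watching the tail (p99) rather than only the median is what exposes occasional glitches. For example, a chip that throttles when it heats up may look fine on average while missing deadlines now and then.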

Finally, think about software integration. The hardware works best when it plugs cleanly into frameworks like TensorFlow or PyTorch, and clear, well‑documented driver support makes adoption easier for developers.
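Frameworks usually pick hardware at runtime by asking whether a device's driver is usable. In PyTorch, for instance, code commonly checks `torch.cuda.is_available()` before choosing a GPU. The sketch below shows the general idea as a plugin‑style backend registry with a CPU fallback. Everything in it (`Backend`, `register_backend`, `select_backend`, and the `my_npu` accelerator) is hypothetical and does not reproduce any framework's actual API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Matrix = List[List[float]]


@dataclass
class Backend:
    is_available: Callable[[], bool]  # e.g. "is the driver installed?"
    matmul: Callable[[Matrix, Matrix], Matrix]


_REGISTRY: Dict[str, Backend] = {}


def register_backend(name: str, backend: Backend) -> None:
    _REGISTRY[name] = backend


def select_backend(preference: List[str]) -> str:
    """Return the first backend in preference order whose driver reports ready."""
    for name in preference:
        backend = _REGISTRY.get(name)
        if backend is not None and backend.is_available():
            return name
    raise RuntimeError("no usable backend registered")


def cpu_matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain Python reference implementation: always available as a fallback.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


register_backend("cpu", Backend(lambda: True, cpu_matmul))
# Hypothetical accelerator whose driver is not installed on this machine;
# cpu_matmul is only a placeholder for its real kernel.
register_backend("my_npu", Backend(lambda: False, cpu_matmul))

name = select_backend(["my_npu", "cpu"])
print(name, _REGISTRY[name].matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
# cpu [[19, 22], [43, 50]]
```

This is why driver support matters. If the availability check or the kernels are missing, the framework silently falls back to the CPU and the new hardware goes unused.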

In short, AI hardware blends fast processors, smart memory, and efficient power designs. By focusing on modularity, supply‑chain reliability, and real‑world testing, manufacturers can deliver devices that bring AI to life wherever it’s needed.

AI Chip Manufacturing in India: Who Makes Them and What's Next?

- Aarav Sekhar

- Jul 28, 2025

Discover which companies are making AI chips in India, how India is building its semiconductor industry, and what it means for AI-driven innovation.