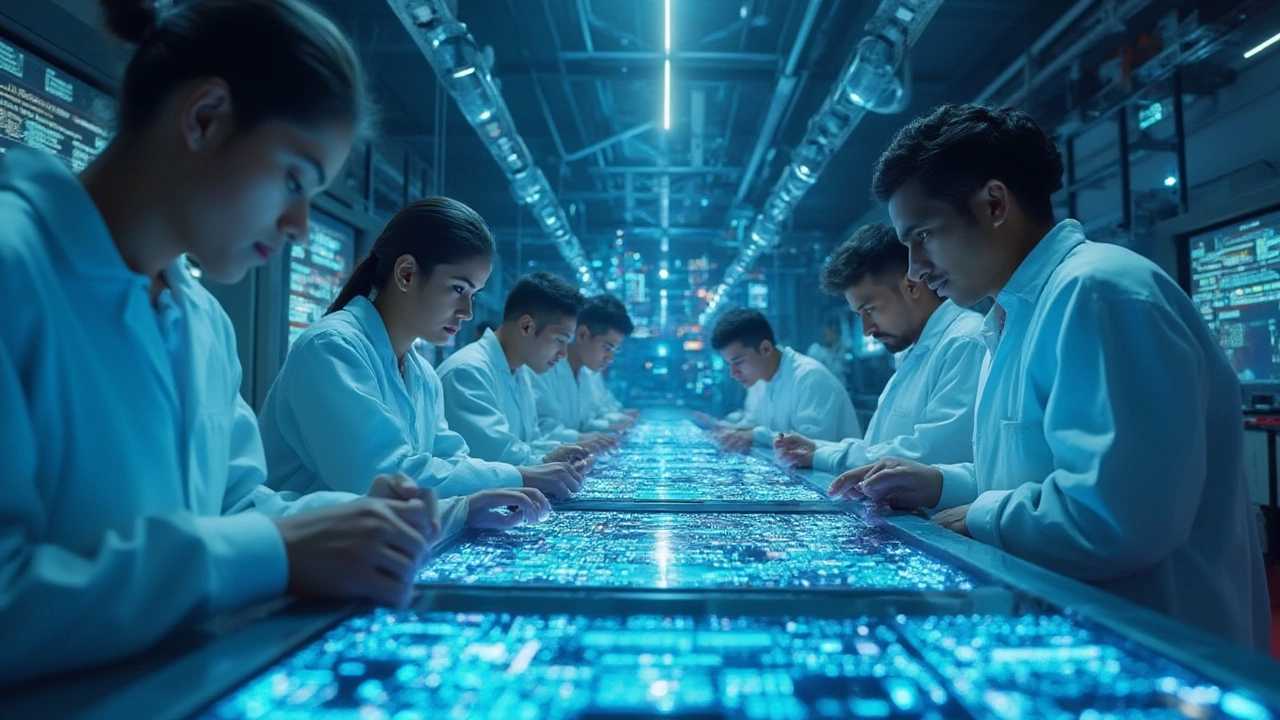

AI processors – What They Are and Why They Matter

Ever wondered how your phone unlocks in a flash or how video platforms keep getting better at recommending what to watch? A big part of the answer is AI processors. These are chips built specifically to handle the heavy math behind machine‑learning models, and they’re showing up in everything from wearables to massive cloud servers.

Traditional CPUs are great at handling many different tasks, but they’re not optimized for the parallel calculations that neural networks need. AI processors solve that by packing in many small compute units, in some designs thousands of them, that crunch data simultaneously. The result is faster, more efficient AI that uses less battery and less electricity for the same work.
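To make the "parallel calculations" point concrete, here is a minimal sketch in plain Python of one fully connected neural‑network layer. The function names (`dense_layer`, `count_macs`) and the toy numbers are illustrative assumptions, not taken from any particular chip or framework. Each output value is an independent dot product, which is why hardware with many compute units can work on all of them at once.

```python
def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer.

    out[j] = biases[j] + sum over i of inputs[i] * weights[i][j]
    """
    outputs = []
    for j in range(len(biases)):
        # Each output is an independent dot product. A CPU-style loop does
        # them one after another; parallel hardware can do them together.
        total = biases[j]
        for i in range(len(inputs)):
            total += inputs[i] * weights[i][j]  # one multiply-accumulate
        outputs.append(total)
    return outputs


def count_macs(num_inputs, num_outputs):
    """Number of multiply-accumulate operations in one dense layer."""
    return num_inputs * num_outputs


# Toy example (hypothetical values): 3 inputs, 2 outputs.
x = [1.0, 2.0, 3.0]
w = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
b = [0.5, -0.5]

print(dense_layer(x, w, b))    # [4.5, 4.5]
print(count_macs(1024, 1024))  # 1048576
```

Even a single modest layer with 1,024 inputs and 1,024 outputs needs about a million multiply‑accumulate steps, and real models stack many such layers, so doing those steps side by side matters.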

Types of AI processors you’ll see around

When you hear the term “AI chip,” people usually think of three main families:

- GPUs (Graphics Processing Units): Originally made for gaming, GPUs excel at parallel work. They’re the go‑to choice for training big models because they can move huge amounts of data quickly.

- TPUs (Tensor Processing Units) and other ASICs (Application‑Specific Integrated Circuits): These are custom‑built for the specific math operations used in deep learning. Google’s TPU, for example, is an ASIC designed around neural‑network matrix math, and it can cut training time substantially for workloads that suit it.

- NPUs (Neural Processing Units) and edge AI chips: Designed for phones, cameras, and IoT devices, they bring AI inference (the part where the model makes predictions) right to the device, keeping data private and reducing latency.

Each type has its sweet spot. GPUs dominate research labs, TPUs shine in large data‑center training and inference workloads, and NPUs power the smart features you already use daily.

Key features to look for

If you’re choosing an AI processor for a project, keep an eye on three practical specs:

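- Throughput: Often quoted in TOPS (tera‑operations per second, meaning trillions of operations per second), this tells you the chip’s peak calculation rate. Higher numbers generally mean faster model execution, though real‑world speed also depends on the numeric precision used and how quickly the chip can be fed data.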

- Power efficiency: For mobile or edge devices, you want more work per watt. Low‑power chips let you run AI features without blowing through the battery.

- Flexibility: Some chips lock you into a specific framework (like TensorFlow). A flexible processor supports multiple toolchains, making life easier for developers.

In practice, you’ll often trade off raw speed against energy use. A data center might pick a power‑hungry GPU for maximum performance, while a smartwatch settles for an ultra‑efficient NPU that can still run voice commands.
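The speed‑versus‑energy trade‑off can be put into rough numbers. The sketch below is a back‑of‑the‑envelope calculation, not a benchmark: the two chip entries, their TOPS and wattage figures, the per‑inference operation count, and the `utilization` factor are all made‑up assumptions chosen only to illustrate the arithmetic.

```python
def tops_per_watt(tops, watts):
    """Power efficiency: peak tera-operations per second per watt."""
    return tops / watts


def estimated_latency_ms(ops_per_inference, tops, utilization=0.3):
    """Rough time for one inference, in milliseconds.

    `utilization` is an assumed fraction of peak throughput actually
    achieved; real values vary widely by model and chip.
    """
    effective_ops_per_second = tops * 1e12 * utilization
    return ops_per_inference / effective_ops_per_second * 1000.0


# Hypothetical chips; these are NOT real product specifications.
chips = {
    "datacenter_gpu": {"tops": 1000.0, "watts": 400.0},
    "edge_npu": {"tops": 10.0, "watts": 2.0},
}

# Assumed cost of one inference for some model: 5 billion operations.
ops = 5e9

for name, spec in chips.items():
    efficiency = tops_per_watt(spec["tops"], spec["watts"])
    latency = estimated_latency_ms(ops, spec["tops"])
    print(f"{name}: {efficiency:.1f} TOPS/W, ~{latency:.3f} ms per inference")
```

With these invented figures, the data‑center chip finishes each inference far sooner, while the edge chip does twice as much work per watt (5.0 versus 2.5 TOPS/W), which is the kind of trade‑off the specs above are meant to expose.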

Another practical tip: look for chips that come with good software support. A solid SDK, pre‑optimized libraries, and community forums can shave weeks off development time.

AI processors are also shaping entire industries. In automotive, dedicated AI chips enable real‑time object detection for self‑driving cars. In healthcare, they accelerate image analysis, helping doctors spot problems faster. Even manufacturing floors are adding edge AI chips to monitor equipment health in real time, reducing downtime.

Looking ahead, the trend is clear: more AI will move to the edge. That means smaller, more power‑efficient chips that can run sophisticated models without needing a constant cloud connection. Expect to see more hybrid designs that combine a small CPU core with an NPU, giving devices the best of both worlds.

Bottom line: AI processors are the engines behind the smart features we rely on. Whether you’re a hobbyist building a home‑automation gadget or a startup scaling a cloud service, picking the right chip depends on speed, power, and software support. Understanding these basics helps you make a choice that fits your needs without overpaying or over‑engineering.

AI Chip Manufacturing in India: Who Makes Them and What's Next?

- Aarav Sekhar

- Jul 28, 2025

Discover which companies are making AI chips in India, how India is building its semiconductor industry, and what it means for AI-driven innovation.